Artificial Intelligence (AI) is transforming industries, from software development to customer service. But one critical bottleneck remains: integration. Traditionally, connecting AI models to external data, APIs, and services has required custom, complex setups—each one tailored for a specific use case.

Enter the Model Context Protocol (MCP), an open standard launched by Anthropic in November 2024. MCP is poised to revolutionize how AI connects with the world—acting as a universal adapter, much like USB-C does for hardware.

In this blog, we'll explore what MCP is, how it works, its real-world applications, benefits, and the road ahead.

What Is MCP and How Does It Work?

MCP is a standardized protocol that allows AI models to interact effortlessly with external data sources, tools, and services. Think of it as a USB-C for AI: a plug-and-play system that replaces the old M×N problem (every app needs to integrate with every tool) with a scalable M+N model.

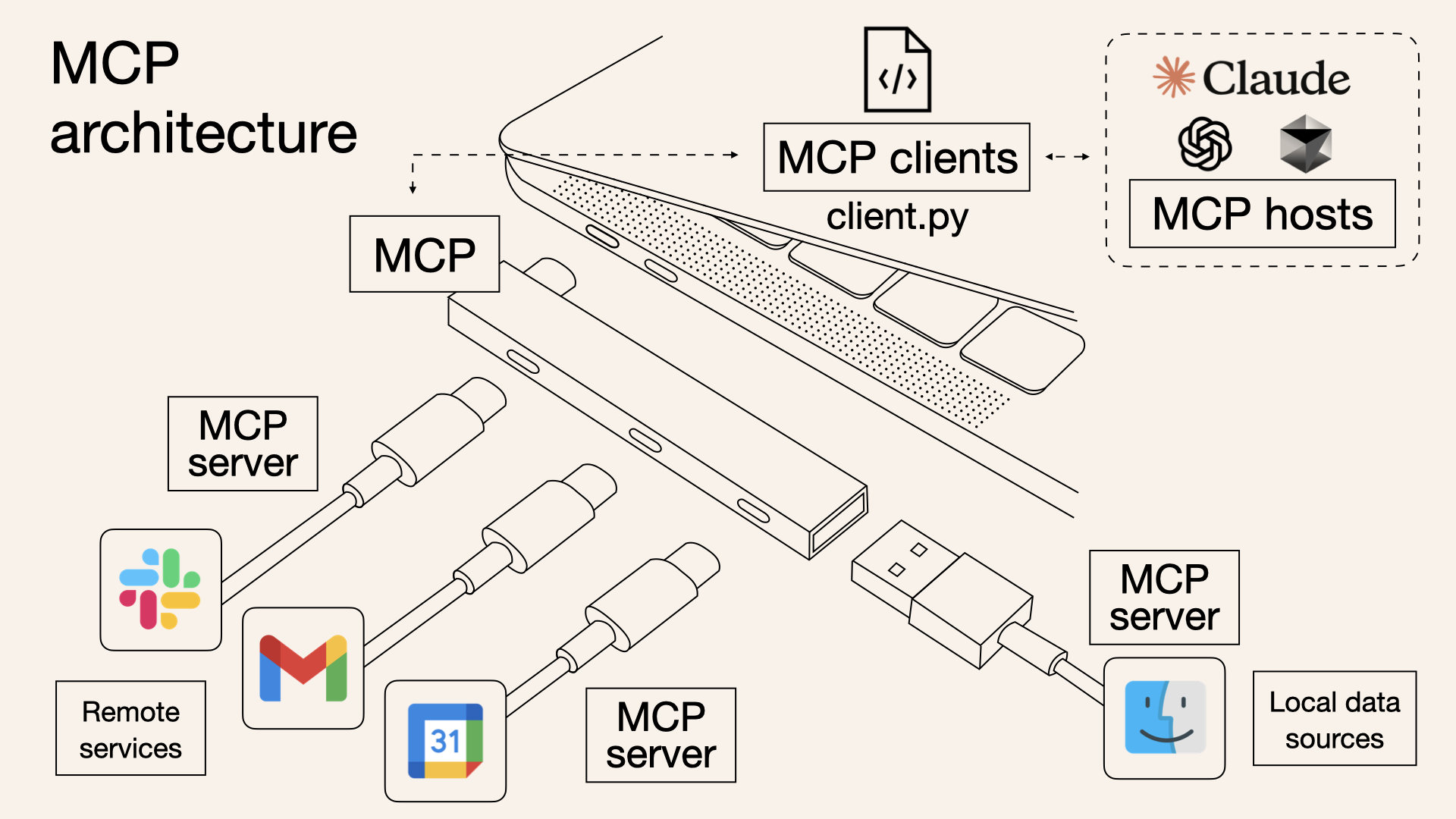

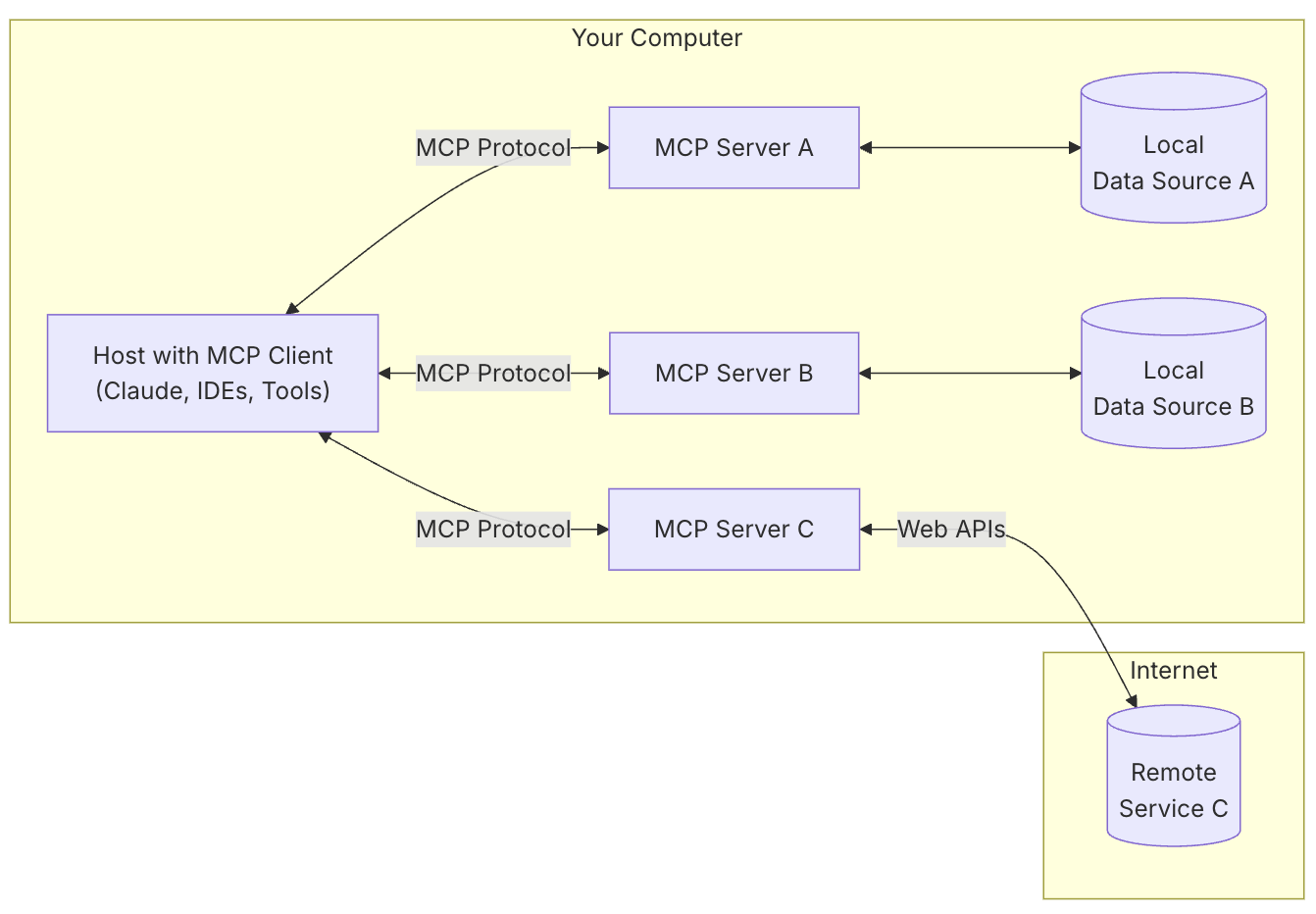

MCP's architecture consists of three essential components:

- MCP Hosts: AI-powered applications like Claude or development environments (e.g., IDEs) that users interact with.

- MCP Clients: These live inside the host and manage communication.

- MCP Servers: Lightweight programs that expose APIs to tools, data, and prompts.

MCP defines three key primitives:

| Primitive | Controlled By | Description |

|---|---|---|

| Resource | Application | Access to data (files, APIs, databases) |

| Tool | Model | Executable actions (e.g., send email, call API) |

| Prompt | User | Templates that guide model behavior |

Communication is powered by JSON-RPC 2.0, with transport mechanisms including stdio (for local use) and HTTP/SSE (for remote streaming).

Figure 1: MCP as a USB-C for AI—standardizing model-to-service interactions.

MCP in Action: Real-World Applications

MCP is already making waves across multiple domains:

Software Development

IDEs like Cursor and Zed use MCP for AI-assisted code completion, refactoring, and GitHub PR management.

Customer Support

AI agents use MCP to fetch CRM data, ticket histories, and generate real-time, personalized support responses.

Enterprise Workflows

MCP provides seamless access to internal databases, Google Drive, Notion, and cloud storage services.

Automation

MCP allows AI agents to trigger Slack messages, automate Gmail workflows, or orchestrate actions through Zapier.

Figure 2: MCP clients link to local and remote servers, abstracting away connection complexity.

Example applications:

- GitHub PR reviewers with built-in commenting

- Real-time weather data via API integration

- Calendar scheduling agents coordinating multi-step tasks

Why MCP Matters

MCP brings several key benefits to the table:

- Simplified Integration – No more custom glue code for every tool

- Reusable & Interoperable – One server supports many AI apps

- Real-Time Context – Boosts model relevance by accessing live data

- Agentic Workflows – Supports autonomous, multi-step task chains

- Self-Hosted & Secure – Maintain local control of sensitive data

- Vendor Neutral – Compatible with Claude, OpenAI, DeepMind, and others

Challenges and Road Ahead

While MCP is promising, it's still evolving. Some current limitations include:

- Evolving Spec – Still in preview, subject to changes

- Authentication Gaps – OAuth and other auth standards are in progress

- Context Overload – LLM token limits can be strained with too many active resources

- Prompt Injection Risks – Especially from untrusted external servers

- UI/UX Gaps – No consistent pattern for tool invocation

Upcoming Enhancements

- Server registries for discovery

- Streaming support via Streamable HTTP

- Video/multi-modal integration

- Agent Graphs for complex workflows

- OAuth-based security and fine-grained permissions

The Broader AI Ecosystem

MCP complements and enhances existing AI infrastructure:

| Method | Description | MCP's Value Add |

|---|---|---|

| REST/GraphQL | Static APIs | MCP enables dynamic discovery |

| Function Calling | Native LLM execution | MCP standardizes execution contexts |

| LangChain | Agent orchestration | MCP provides plugin/tool interface |

| OpenAI Plugins | Proprietary plugin system | MCP is open, flexible, and portable |

It also strengthens Retrieval-Augmented Generation (RAG) pipelines by unifying how retrieved context is formatted and accessed.

Final Thoughts: The Future of AI Integration

The Model Context Protocol is more than just a technical spec—it's a paradigm shift. By offering a universal, modular, and secure way to plug AI into the world, MCP is laying the groundwork for deeply integrated, autonomous, and context-aware AI systems.

As adoption grows and the spec matures, MCP could become the go-to backbone for building the next generation of AI applications.

References

- Anthropic. Introducing the Model Context Protocol

- Anthropic. Why MCP?

- GitHub. MCP PR Review Server Example

- Composio. What is Model Context Protocol (MCP)? Explained